AI Agents Are Actors, Not Tools: Why Enterprises Need a New Layer of Runtime Governance

Written by Tim Prendergast

Last updated on: October 14, 2025

The enterprise security model was built for users, devices, and applications. But a new entity category is flooding the stack: AI agents that don’t just assist users, but operate autonomously and collaborate with other AI agents.

Agents can read and write files, execute scripts, query APIs, and modify their environment. They can install packages, move data, and even spawn other agents. Each one behaves like a new kind of insider; they are inherently programmable, scalable, and, if left unchecked, unpredictable.

The potential for productivity and innovation is incredible. At the same time, however, DevOps teams need to establish guardrails that ensure safety, visibility, and control over what those agents can actually do.

Enterprises that once asked, “Who has access?” must now ask a more complex question: “What is this AI process doing right now, and should it be allowed to?”

The Problem: Visibility Ends Where Execution Begins

Most AI governance frameworks today stop at the prompt boundary. They control what gets sent to an LLM or API, but not what that agent does afterward.

Monitoring API calls or MCP traffic is useful, but it’s like watching someone’s email without seeing what files they open or what code they run afterward. Once the agent spins up locally, there is no visibility, and that’s where risk starts.

Remember that agents running on an employee’s laptop can do the things that privileged users can do:

- Read sensitive local files.

- Access corporate data via cached credentials.

- Execute system commands.

- Send requests to unknown external endpoints.

Traditional data loss prevention (DLP), EDR, and network proxies can’t fully see or understand these actions because the agent operates at the user and kernel boundary, which is the same place trusted processes live.

So here’s what enterprises need: a new class of visibility and control that extends beyond APIs and cloud traffic, and down to the runtime itself. Security teams must be able to see what agents are doing in real time, enforce policies that define what’s acceptable, and stop unsafe behavior before it spreads. In other words, AI governance can’t end at the prompt; it has to begin at execution.

Security Observability and Control at the Edge

To make agentic AI viable in the enterprise, three capabilities need to govern how agents operate:

- Runtime Inspection: This is the ability to see what an agent is actually doing on the machine: processes spawned, files accessed, network calls made.

- Policy-Based Enforcement: Rules that govern agent behavior in real time. For example:

  - “AI processes may not write to /etc/.”

  - “Agents can only make HTTP calls to approved domains.”

  - “Block network access to instance metadata endpoints.”

- Immediate Containment: The ability to stop a rogue or runaway agent instantly, killing its process or cutting its network link before it can cause harm.
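
The three example rules above can be sketched as a small decision function. This is a minimal illustration in Python, not a real enforcement engine: the `Action` type, the `is_allowed` function, and the domain list are hypothetical names chosen for this example, and denying anything the rules do not cover is an assumption. The address 169.254.169.254 is the link-local address that major cloud providers use for instance metadata.

```python
import posixpath
from dataclasses import dataclass
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Hypothetical values for illustration; a real deployment would load these from policy.
APPROVED_DOMAINS = {"api.example.com", "pypi.org"}
METADATA_HOSTS = {"169.254.169.254"}  # cloud instance metadata address


@dataclass(frozen=True)
class Action:
    kind: str    # "file.write" or "http.request"
    target: str  # absolute path or URL


def is_allowed(action: Action) -> bool:
    """Return True if the action passes the three example rules."""
    if action.kind == "file.write":
        # Rule 1: no writes to /etc/. Normalize first so "/workspace/../etc/x" is caught.
        path = PurePosixPath(posixpath.normpath(action.target))
        return PurePosixPath("/etc") not in (path, *path.parents)
    if action.kind == "http.request":
        host = urlparse(action.target).hostname or ""
        # Rule 3: block instance metadata endpoints.
        if host in METADATA_HOSTS:
            return False
        # Rule 2: HTTP calls only to approved domains.
        return host in APPROVED_DOMAINS
    # Assumption: anything the rules do not cover is denied.
    return False
```

Path normalization here is purely lexical. It does not follow symlinks, which is one reason the article places real enforcement in the runtime rather than in string checks like these.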

In modern environments, these capabilities can no longer be left to a model gateway or an LLM firewall. They belong to a local governance layer that lives directly on the device, mediating between the agent and the operating system.

Governance at the Kernel Level

Building that layer requires operating at the lowest layer of the system, the kernel, where every decision has to balance performance, safety, and observability. Modern runtime security tools are embracing technologies like eBPF and LSM hooks to instrument this layer safely, without modifying the agent or the OS. This approach allows for real-time observation and enforcement of system calls while maintaining minimal performance overhead, often less than 1% for typical AI workloads.

At this level, security decisions must be:

- Fast — Evaluating actions in real time without slowing down agent execution.

- Granular — Distinguishing between legitimate AI activity and potential misuse.

- Reversible — Allowing administrators to immediately end a misbehaving agent.

- Auditable — Logging every decision in a verifiable, compliance-ready format.

In other words, it’s Zero Trust for AI processes, evaluating every action, every time, based on dynamic context, rather than on static configuration.
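
One way to make a decision log "verifiable" is to chain records together with hashes so that later tampering is detectable. The sketch below is an assumption about how that property could be achieved, not a description of any particular product. The function names `append_record` and `verify` and the record layout are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first record


def _digest(prev_hash: str, decision: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    body = json.dumps({"prev": prev_hash, "decision": decision}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(log: list, decision: dict) -> dict:
    """Append a decision whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"prev": prev_hash, "decision": decision, "hash": _digest(prev_hash, decision)}
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash:
            return False
        if _digest(record["prev"], record["decision"]) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True
```

Because each record commits to the one before it, changing an old decision requires recomputing every later hash, which an auditor holding the latest hash would notice.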

The Policy Language for Agent Behavior: Cedar

To achieve precision, enterprises will need a formal policy language like AWS Cedar, which is a logic framework purpose-built for authorization and policy enforcement.

Cedar was originally developed by AWS as an open-source, fine-grained policy language designed to evaluate access decisions in real time. It allows engineers and security teams to express nuanced rules like who can perform what action on which resource, and under what conditions.

Unlike hard-coded access rules or static configurations, Cedar policies are context-aware and declarative. They can take into account dynamic signals such as device health, time of day, user identity, or environment variables, and they can be updated centrally without redeploying applications.

For example, a Cedar policy could say:

// Cedar policy
permit (principal, action == Action::"file.read", resource)
when { resource in [ Dir::"/workspace", File::"/etc/resolv.conf" ] };

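This policy does more than grant blanket “read” permission. It permits file reads only when the resource is within the /workspace directory or is the file /etc/resolv.conf; because Cedar denies by default, every other read is refused. Additional conditions can bring in further circumstances of the request, such as the sensitivity of the data or the trust level of the agent at runtime.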

That level of granularity is what makes Cedar essential for managing AI agents. Traditional role-based or network-layer controls can’t express these kinds of conditional, context-rich rules. But Cedar can, and it does so deterministically and at machine speed.

In the context of AI agents, Cedar enables enterprises to:

- Constrain behavior dynamically, ensuring agents can only read or write files that meet specific conditions.

- Apply least privilege continuously, adjusting what’s allowed as agent context changes.

- Audit decisions transparently, producing clear, machine-verifiable logs that explain why each action was permitted or denied.

Cedar provides the language of runtime trust. It turns the question “What should this agent be allowed to do right now?” into an enforceable, testable, and explainable policy, which is what enterprises need to bring safety and predictability to autonomous systems.

Using Cedar, organizations can define rules such as:

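// Assumes a hypothetical Dataset entity type, a trust_score attribute on the principal, and an hour value supplied in the request context
permit (principal, action == Action::"read", resource in Dataset::"public_data")
when { principal.trust_score > 80 && context.hour < 20 };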

This is more than static access control; it’s contextual authorization. Policies can incorporate:

- Device health signals

- Time-of-day or location

- Process identity or reputation

- Parent-child relationships between agents

These same principles underpin StrongDM’s own Policy Engine: real-time, context-aware access control that enforces decisions before actions occur. Extending that model to AI agents is the next logical step.
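
To show how context changes the outcome of the trust-score rule above, here is a rough Python equivalent. It is only an illustration of the logic, not how Cedar evaluates policies. The `Request` type and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    action: str       # e.g. "read"
    collection: str   # e.g. "public_data"
    trust_score: int  # hypothetical 0-100 score assigned to the agent
    hour: int         # local hour of day, 0-23


def permit_public_read(req: Request) -> bool:
    """Permit reads of public data only for trusted agents before 20:00."""
    return (
        req.action == "read"
        and req.collection == "public_data"
        and req.trust_score > 80
        and req.hour < 20
    )
```

The same agent asking for the same resource gets a different answer at 21:00 than at 10:00, or after its trust score drops. That is the difference between contextual authorization and a static permission.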

From Observation to Enforcement: Building Runtime Trust

A safe AI development lifecycle should follow a “Record → Enforce” progression:

- Record Mode — Observe what agents are doing in real time. Establish a baseline of normal behavior.

- Shadow Enforcement — Simulate policy decisions without blocking to validate rules.

- Enforce Mode — Actively block or allow actions based on approved Cedar policies.

This pattern allows developers to move fast without fear—experimenting safely while gaining visibility into their agents’ true behavior. As policies mature, teams can promote them into enforcement mode, turning runtime observation into runtime protection.
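
The three modes can be sketched as a single dispatch function. This is a simplified illustration: the `Mode` enum, the `handle` function, and the log record shape are hypothetical, and `policy` stands in for whatever evaluates the approved Cedar policies.

```python
from enum import Enum
from typing import Callable


class Mode(Enum):
    RECORD = "record"
    SHADOW = "shadow"
    ENFORCE = "enforce"


def handle(action: str, mode: Mode, policy: Callable[[str], bool], log: list) -> bool:
    """Return True if the action may proceed under the given mode."""
    if mode is Mode.RECORD:
        # Record mode: observe only, no policy evaluation.
        log.append({"action": action, "mode": mode.value})
        return True
    allowed = policy(action)
    # Shadow and enforce modes both log what the policy decided.
    log.append({"action": action, "mode": mode.value, "would_allow": allowed})
    # Only enforce mode lets the decision actually block the action.
    return allowed if mode is Mode.ENFORCE else True
```

Shadow mode produces the same log entries as enforce mode, so a team can review what would have been blocked before promoting a policy.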

At the enterprise level, the same Cedar policies can be distributed via a centralized policy store, ensuring that local experimentation and fleet-wide governance share the same logic and audit model.

The result is consistent guardrails from laptop to production.

The Role of the Local Gateway

Think of this layer as a gateway that lives on your laptop: a policy-enforcing intermediary between the AI agent and the rest of your system. It must be able to:

- Intercept calls from the agent to the OS or network.

- Check each action against enterprise policies.

- Log or block behavior that violates those rules.

- Report telemetry to a central management service (or “MCP-like” control plane).

Unlike an MCP server or LLM proxy, this gateway operates locally, enforcing rules where the code actually runs. It becomes the point of trust between the agent and the system, turning your laptop into a governed runtime rather than an ungoverned execution environment.
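
The four responsibilities listed above can be sketched as a thin wrapper around each agent action. This is a user-space illustration only; the article places real interception at the kernel level. The `LocalGateway` class, its `intercept` method, and the action strings are hypothetical.

```python
from typing import Any, Callable, Dict


class LocalGateway:
    """Checks each agent action against a policy and reports the outcome."""

    def __init__(
        self,
        policy: Callable[[str, str], bool],
        report: Callable[[Dict[str, Any]], None],
    ) -> None:
        self.policy = policy  # (agent_id, action) -> allowed?
        self.report = report  # sends telemetry to a central service

    def intercept(self, agent_id: str, action: str, run: Callable[[], Any]) -> Any:
        # Check the action against policy before anything executes.
        allowed = self.policy(agent_id, action)
        # Report every decision, allowed or not.
        self.report({"agent": agent_id, "action": action, "allowed": allowed})
        if not allowed:
            # Block: the underlying operation is never invoked.
            raise PermissionError(f"blocked by policy: {action}")
        return run()
```

The decision is reported before the action runs, so the telemetry stream records blocked attempts as well as permitted ones.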

From Local to Fleet: Scaling Safe Autonomy

For individual developers, runtime governance provides peace of mind: you can experiment with agents without risking accidental data loss or network sprawl.

For teams, it introduces policy standardization: shared Cedar rules are checked into version control and hot-reloaded across all environments, ensuring consistent behavior.

For enterprises, it becomes the bridge from experimentation to production, secure by design from day zero. By embedding governance and enforcement directly into the development lifecycle, teams ensure that every agent is production-ready from the start, not retrofitted with security controls later. The same runtime enforcement model scales from a single container to thousands of endpoints, delivering fleet-wide visibility, least-privilege enforcement, and detailed forensic trails, all without rewriting a single line of agent code.

This is what safe autonomy needs to be: observability, containment, and policy-based control at the level where execution happens.

Next Steps

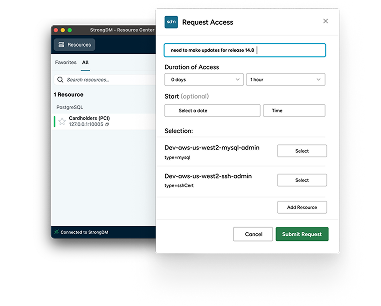

StrongDM unifies access management across databases, servers, clusters, and more—for IT, security, and DevOps teams.

- Learn how StrongDM works

- Book a personalized demo

- Start your free StrongDM trial

About the Author

Tim Prendergast, Chief Executive Officer (CEO). Before joining StrongDM, Tim founded Evident.io, the first real-time API-based cloud security platform. In 2018, Palo Alto Networks (PANW) acquired Evident.io, and Tim joined the executive team at PANW. As the first Chief Cloud Officer, Tim helped outline GTM and product strategy with the C-suite for the cloud business. Tim also served as the principal architect for Adobe's Cloud Team, designing and scaling elastic AWS infrastructure to spark digital transformation across the industry. Tim’s love for innovation drives his interest as an investor in true market disrupters. He enjoys mentoring startup founders and serving as an advisor.
