How Hearst Eliminates DevOps Complexity -- An Architecture Review

Written by Schuyler Brown

Last updated on: May 26, 2022

Hearst Eliminates DevOps Complexity with Automation

Jim Mortko is responsible for leading all Internet-based engineering and digital production efforts, along with ecommerce and marketing initiatives that support Hearst Magazines’ diverse units including 20 U.S. magazines, Hearst Digital Media, the Hearst App Lab and Hearst Magazines UK. He is credited with spearheading the launch of five internal systems, along with supporting the launch of more than 10 websites.

In this talk, he and DevOps Engineer Manuel Maldonado discuss how Hearst eliminated DevOps complexity through automation and tooling decisions. Listen as they walk through their services and application architecture and download the slides now.

Talk Transcript

Jim Mortko 0:05

How's it going? I am painfully aware that we are the last talk and we are standing in the way of drinks for everyone. So I promise to go quickly.

I'm going to just cover the state of our DevOps team, and then Manuel's going to jump into some more interesting things. So MediaOS is our global platform here at Hearst. It's really for all the content creation, distribution, and monetization. It's used by the magazines division as well as our TV division; you can see some of the stats here. I think the ratio between engineers and DevOps engineers is similar to what I've been hearing here.

And I don't think that the DevOps team could succeed in their role unless they really had their act together the way they do here. For us, I kind of liken it to the drummer in a band: it doesn't matter how good the rest of the band is, if the drummer is off, no one's going to sound good.

And that's the same thing here. So, some general practices. And then, I'll see, that's what happens when you make something in Google Slides and then put it into PowerPoint. But anyway, architecture review: this is really the cornerstone of how things are built in a way that is scalable and secure. Before any code is written for any new service, or for a re-architecture of an existing service, it goes through this process. And this process involves our architects, senior engineers, and a heavy representation from our DevOps team.

That way the technology being chosen, the way things are going to be built, the data flows, it's all evaluated ahead of time and steered correctly. We have a number of different technologies, but we tend to stay within a few of them just for sanity's sake, like Postgres, Redis, Python, and React.

The last talk was talking about, you know, putting the power of deployment in the engineers' hands. So we're doing that, and we do that through Slack. Every one of our services has its own Slack room. Ahab, the bot, knows who's allowed to release software, and it's done through a set of commands. And similarly, in that process, some of our services that are really critical will invoke a load test, and that load test has to pass a certain threshold or the code is not going to deploy. And then we've got some services that have complete automated testing done. This is a new initiative for us; we've only got maybe seven out of the 50 that we've got completely automated, but they can go live as soon as something's committed, which is great.

In terms of Kubernetes, we rolled our own on AWS. Before we had Kubernetes, we used fleet for the containers, and it was a nightmare.

And so now that we've got our Kubernetes running with auto scaling for both containers and pods, life is so much better. And this is just a shift in mentality. We've got Scrum teams that are really focused on velocity and getting their code out. And there's a real pride around getting things out into production when you say you will. But we've really instituted a mentality that if something goes wrong right after a release, even if you don't think it's related, roll back first. We're not saving lives. But at the same time, a lot of people's jobs depend on the software, there's a revenue stream, and there's our credibility. So we've really tried to adopt this: roll back first, find out what happened later. So, our escalation process. You know, we really want to ensure that our DevOps team is not the only one on the hook for anything that goes wrong.

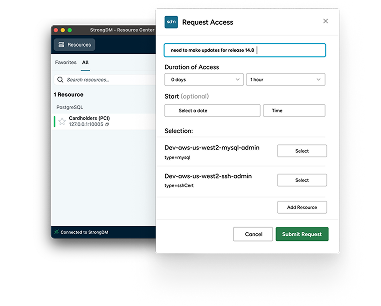

And so we've got a directory of every single engineer and their off-hours contact information, so that if they need to be contacted, they're essentially on call. I would just like to get a show of hands: who else has this practice in their company right now? Okay, so my director of DevOps said I should take this slide out, because everyone's doing it wrong. And then the use of the StrongDM app, you know, it's been really great for us. In terms of deprovisioning, it's been, you know, wonderful. And then there's that extra layer of security. And I'll turn it over to Manuel.
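Editor's note: the Slack-driven release flow Jim describes (a bot that knows who may release each service, plus a load test that critical services must pass) can be sketched roughly as below. This is a minimal illustration, not Hearst's bot; the `Service` class, the `can_deploy` function, and the p95 latency threshold are all assumptions made for the example, since the talk gives no names or numbers.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple


@dataclass
class Service:
    """Hypothetical per-service release policy (all names are illustrative)."""
    name: str
    releasers: Set[str] = field(default_factory=set)  # users allowed to release
    critical: bool = False       # critical services must pass a load test first
    max_p95_ms: float = 500.0    # assumed threshold; the talk gives no figure


def can_deploy(service: Service, user: str,
               load_test_p95_ms: Optional[float] = None) -> Tuple[bool, str]:
    """Decide whether a release command from `user` should proceed."""
    if user not in service.releasers:
        return False, f"{user} is not allowed to release {service.name}"
    if service.critical:
        if load_test_p95_ms is None:
            return False, "load test has not run"
        if load_test_p95_ms > service.max_p95_ms:
            return False, "load test is over the threshold"
    return True, "ok"
```

A bot would call something like `can_deploy(service, slack_user, measured_p95)` before triggering the release and post the returned reason back to the service's Slack room when the answer is no.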

Manuel Maldonado 4:34

Alright, so I'll actually be talking a little bit about the state of affairs of pretty much all of the tools that we use in DevOps, in our team specifically. But first, a little bit of background. So for our team, we actually manage all of our infrastructure using Ansible. Again, mostly. Ansible is really just used to create a lot of our AWS CloudFormation stacks. So pretty much everything that's running in our infrastructure is in AWS, and most of what we create is all via CloudFormation. Again, mostly. By that, I mean anything from networking to higher-level things like S3 buckets, queues, and databases. It's pretty much the entire thing.

Now, we also do Kubernetes this way. We run Kubernetes on top of AWS, like many of you guys have heard today, and it's also done using this set of Ansible scripts. We also manage application life cycles somewhat through the scripts as well. But we also have some bots, like Jim mentioned, that manage the life cycle of the applications: deployments, builds, setting environment variables, for example. That's all done through our Slack bots. But we also have some in-house wrappers around some of these commercial products. So for example, around our Kubernetes clusters, we have wrappers for us to be able to interact with them more easily. So the developers actually don't have direct access via kubectl. They just interact with that wrapper, for example. And, sadly, there are still a few things that we do manually. It's just how it is, sadly. But because we have all these things, we have Ansible on one side, we have some bots, we have some wrappers around commercial products, and then some manual stuff, we just don't have a unified or global view of what's actually currently running in our clusters, or in our infrastructure for that matter.

So an example of that would be, let's say we have a software engineer who's going to create a new application. Very simple example: they want to create a new app. So the way it works, through Slack, they tell a bot, "Hey, I want a new app." And the bot says, "Yeah, cool, it's provisioned." Quite simple and straightforward. Not really. Behind the scenes, that bot is really going to GitHub, going to, you know, Quay, Travis, the wrappers around Vault and Kubernetes, to make sure everything is set up for that application. So there's a lot of automation that happens behind the scenes. But sadly, that automation all lives in that bot. All that heavy lifting is done in that bot. And again, it seems pretty straightforward, but that bot is really doing the heavy lifting. And the problem is what happens when you have one bot that does one thing and a second bot that does something else. We're somewhat moving away from one of the bots to another, but you have a bot doing your environment variables, the ones that are set in the cluster, you have one for new applications and status, sometimes you have to run your own Ansible scripts to do certain things, or even run kubectl commands directly, or sometimes even go to CloudFormation to check on some stuff. It's really just too much. It really is.

So we decided to then try to fix the problem by splitting it. I mean, it's a very basic engineering principle: you divide and conquer. So on one side, everything that's infrastructure, we decided to do in one tool. We're not going to touch on it too much today. We call it Forge, and it's essentially just our team's kops: we're building a tool that's supposed to create an entire AWS infrastructure, all the networking and Kubernetes on top of it, and leave a cluster essentially just ready for our tools to kick in. And then on the other side, the one we're going to touch on a little bit today, is Appliance. Appliance really focuses on all the other stuff that's application specific, which means creating applications, like I said, but also things like backend services. So Redis caches, databases: what happens if a developer wants one of those? We don't want to overload Forge, our kops, with that. We want to make sure that we do it separately.

So let's go to a little bit of a higher level on what Appliance is supposed to be. The high-level goal is really just to unify and coordinate all of our back ends. So all these back ends that we have right here, we want to make sure that Appliance takes care of them, but from the applications' perspective, nothing infrastructure related. Really what we want is a single API that allows us to create new apps, configure their environments, and configure their back ends. So if we want a database, behind the scenes it creates that CloudFormation template, runs it, automatically generates and injects a random username and password, and also makes sure that those are sent to Vault. So not even we get to see them. They're automatically created and stored as a secret in Vault. Then approvals and validation: if a developer really wants something that's super big, we want to make sure that we approve it first, and we want to have that as part of this API. The goal is really to give the dev team and engineering team a self-service endpoint, with a CLI, an API, and a UI, that allows provisioning of the applications, which would also allow us, the DevOps team, to free up our time to build more things like this, right?

A little quick overview of it running. It's very difficult to see, but essentially I'm here just doing curl commands to check everything from an application's perspective. I want its profile, I want to know what environments it has, where it's deployed right now, what's configured, where it's currently running, its settings with secrets hashed out, whether it has ingresses set up, what backend services are running, all that in one API. And like I said, before this, I had to go to seven different places to do that, so this is beautiful.

A little bit more about the technical details. For the MVP, we're a Python shop for the most part, so we decided to just do a Python Falcon app as the front end. Pretty straightforward. For the MVP especially, we decided to do most of the integrations as read-only access first, meaning not creating anything, but just querying the entire global infrastructure, because we wanted to fix the problem of not having a single view. But also, if we found that we couldn't integrate some of these services, then we would fail fast: we'd realize we can't do this right now and we have to do something else. So we decided to take the easier approach first, and if the MVP was successful, then we'd go on to future plans, which it was. And right now, like I said, it does read-only access to pretty much all of our managed environments, which is fantastic.

Now for future plans. We want to create resources. By resources, I mean backend databases, queues, all that stuff that the application needs; we want the developers to be able to access it. The reason I put "asynchronously" there is that since we're using CloudFormation templates, we actually have to add tasking and queuing. So there's a lot of heavy lifting that we have to do behind the scenes, and we're actually going to be creating that.

We also want to enhance or replace some of our in-house systems. So our bots, they have a lot of logic in them. We want to take that logic out and put it into Appliance. That way, the bot just receives a request and talks to the API, the API does the heavy lifting, and that's about it. That way, we'll also make it easier for us to move from having multiple bots to just having one. And then finally, having a web interface as well as a CLI, because we have developers or software engineers and leads that are actually much more comfortable in a CLI, and there are some that are much more comfortable in a UI. And to be honest, to see what's currently running in your environment, it's nice to have a UI that says, hey, there's my app, there are its three environments, there are my databases that are running and how big they are. So stuff like that. But that's really the future plan for Appliance. Like I said, the whole point of it is trying to have a single place for the development teams to be able to go in and see their entire application. Well, the global view of their application, that's the word. That's about it.
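Editor's note: the self-service database request described above (generated credentials that go straight into a secret store, with an approval step for large requests) might look something like this sketch. Everything here is hypothetical: `InMemorySecretStore` is a stand-in rather than the Vault API, and the size names, secret path layout, and `request_database` function are invented for illustration. The real flow would also render and launch a CloudFormation template, which is only marked with a comment.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits
AUTO_APPROVE_SIZES = {"small", "medium"}  # assumed policy, not from the talk


class InMemorySecretStore:
    """Stand-in for a real secret store such as Vault (no Vault API is used)."""

    def __init__(self):
        self._data = {}

    def write(self, path, secret):
        self._data[path] = dict(secret)

    def read(self, path):
        return self._data[path]


def random_token(length=24):
    """Generate a random alphanumeric string with a CSPRNG."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def request_database(app, env, size, store, approved=False):
    """Handle a developer's request for a database back end."""
    if size not in AUTO_APPROVE_SIZES and not approved:
        # Large requests wait for a human approval before anything is created.
        return {"status": "pending_approval", "app": app, "env": env, "size": size}
    path = f"apps/{app}/{env}/database"
    store.write(path, {"username": "u_" + random_token(12),
                       "password": random_token()})
    # A real implementation would render and launch a CloudFormation
    # template here, passing the generated credentials as parameters.
    return {"status": "provisioning", "secret_path": path}
```

The response carries only the secret's path, never the credentials themselves, which mirrors the point in the talk that nobody needs to see the generated username and password.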
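Editor's note: the read-only "single view" idea can be sketched without any web framework. The example below merges lookups from several back ends into one document and masks secret settings before returning them. The function names, the source names, and the `****` mask are assumptions for illustration; the talk does not describe the actual response format.

```python
def mask_secrets(settings, secret_keys):
    """Return a copy of settings with secret values hidden."""
    return {key: ("****" if key in secret_keys else value)
            for key, value in settings.items()}


def application_view(app, sources, secret_keys=frozenset()):
    """Merge read-only lookups from several back ends into one document.

    `sources` maps a section name to a callable that takes the app name and
    returns that section's data (hypothetical stand-ins for real clients).
    """
    view = {"app": app}
    errors = {}
    for name, fetch in sources.items():
        try:
            view[name] = fetch(app)
        except Exception as exc:  # one failing back end should not hide the rest
            errors[name] = str(exc)
    if "settings" in view:
        view["settings"] = mask_secrets(view["settings"], secret_keys)
    if errors:
        view["errors"] = errors
    return view
```

Because every source is only queried and never written to, a failed integration shows up as an entry under `errors` instead of a partial change, which fits the fail-fast reasoning behind starting with read-only access.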

Any questions? For me? And Jim?

Q+A

Audience 13:16

The architecture reviews, can you kind of describe a little bit of the process of how you actually run those? Like, is that sort of on demand, you know, if somebody has a feature they want to do, they say, hey, I need an architecture review? Or do you do that on a cadence?

Jim Mortko 13:31

We have a weekly standing meeting scheduled, and it gets cancelled sometimes because maybe nothing new is being, you know, sort of ideated over. But, you know, you're expected to bring data flow diagrams, an explanation of what you're building and what problem you're trying to solve, database tables, and be specific about the tech you're using. And it's not supposed to be a session where everyone's looking to, you know, kind of beat down on somebody. But it has turned into that in some cases. And I will also add that, prior to having that process, some of the things that were built, we're still suffering with right now. We're still looking to replace them, because they were just done so poorly, but now they're part of the infrastructure. The good thing about microservices is you can replace one thing at a time.

Audience 14:27

More on that architecture review, how did, how did you go from not having it to having it? And how did you get buy in from all the, I guess, management stakeholders, everything that kind of like make that process a thing, because we've tried a number of different ways to get all the parties in a room to talk about the best way to do things? And we just haven't had any luck getting there.

Jim Mortko 14:47

Yeah. That's a good question. So it really did come out of necessity. First of all, we had to get upper management's buy-in. But also, in the beginning of this process, there were three different teams working on this platform, three teams that were in different divisions at Hearst, and different divisions at Hearst may as well be separate companies. So this was kind of a first stab at a corporate open source project, if you will. And things were getting out of hand in terms of stuff that was just being built. And we had our single DevOps team being expected to, you know, support it, and it was just not going to work that way. We're not going to have things getting thrown over the wall with someone just saying, you guys have to support this now. And, you know, again, it came with a lot of talking about it, raising the problems that we had, escalating, like I said, to upper management, and getting that kind of support. And also just getting the right group of people thinking about it. And again, having DevOps at the table makes it so much more palatable for them to then go forward and say, I know what this application is, how it works, and how I can support it.

Audience 16:02

You mentioned automating the load testing, are you actually using a production like environment to get kind of like real world results? Or do you have some kind of like baseline from the previous build, and it just can't vary from the baseline?

Jim Mortko 16:23

We have a production-like environment. It's a load test environment, yeah, so it is specifically for that. And I mean, even the data that we have on all those environments tends to mimic production as well. So yeah. Hope that answers your question.

Audience 16:48

Hi, I was wondering, because you were mentioning Ansible and you said "mostly" in parentheses. So I was just wondering if you noticed, or if you had, any challenges with Ansible itself that made you, like, write this "mostly" part?

Manuel Maldonado 17:06

So I think the biggest problem was that we backed ourselves into that corner. We wrote that tooling originally with Ansible, and we just added too much to it. So for example, I'm relatively new to the team. When I joined, one of the things that I realized was that, for the most part, I wouldn't know where certain variables that describe our cluster came from. And that's not necessarily a problem with Ansible. It's supposed to be a feature that you have a default in one place but you can override it someplace else. It just becomes very difficult to understand where they're coming from, it really does. And then there were certain things as well, since we're actually using an older version of Ansible, that we couldn't do without trouble at the time. So we actually had to write our own Python scripts around that, which is where the "mostly" comes from. And also the Kubernetes interactions: like I said, we're not completely there, so we have to just call command line utilities from Ansible. That's also where the "mostly" comes from. So it's really just a mess sometimes. Yeah.
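Editor's note: the difficulty Manuel describes, not knowing which layer a variable's final value came from, is easier to see with a toy model. The sketch below is not Ansible's actual precedence logic; it assumes a simple list of named layers ordered from lowest to highest precedence and records which layer supplied each value and which layers it shadowed. The layer names and the `resolve_with_provenance` function are hypothetical.

```python
def resolve_with_provenance(layers):
    """Resolve variables across layers, remembering where each value came from.

    `layers` is a list of (layer_name, variables) pairs ordered from lowest
    to highest precedence. Later layers override earlier ones.
    """
    resolved = {}
    for layer_name, variables in layers:
        for key, value in variables.items():
            shadowed = []
            if key in resolved:
                previous = resolved[key]
                shadowed = previous["shadowed"] + [previous["source"]]
            resolved[key] = {"value": value, "source": layer_name,
                             "shadowed": shadowed}
    return resolved
```

With three layers that all set the same variable, the result names the winning layer and lists the two it overrode, which is exactly the information that gets hard to recover by reading the files alone.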

Audience 18:22

Out of curiosity, how are you running your Ansible here? Sorry, your Kubernetes cluster? Are you using Amazon's managed Kubernetes service or something else?

Jim Mortko 18:35

We run in production, most of our Kubernetes clusters are our own; we build them ourselves. We've experimented with EKS. But again, for the most part, it's just the ones that we build. Like someone mentioned in an earlier talk, EKS wasn't available at the time, so we didn't really have a choice. We did it from scratch using CloudFormation templates and Ansible [inaudible]. So the first time was from scratch. Now, in Forge especially, we're writing it ourselves. We still use CloudFormation and whatnot to get the infrastructure ready, but to actually deploy the Kubernetes binaries, the worker nodes, kube-proxy, all that stuff, I think we're using kubeadm now. But for the infrastructure and stuff like that, we still use CloudFormation.


About the Author

Schuyler Brown, Chairman of the Board, began working with startups as one of the first employees at Cross Commerce Media. Since then, he has worked at the venture capital firms DFJ Gotham and High Peaks Venture Partners. He is also the host of Founders@Fail and author of Inc.com's "Failing Forward" column, where he interviews veteran entrepreneurs about the bumps, bruises, and reality of life in the startup trenches. His leadership philosophy: be humble enough to realize you don’t know everything and curious enough to want to learn more. He holds a B.A. and M.B.A. from Columbia University. To contact Schuyler, visit him on LinkedIn.
