Token Security Podcast | Johnathan Hunt, VP of Information Security at InVision Talks Secure Code

Written by Schuyler Brown

Last updated on: May 7, 2024

About This Episode

In this episode, Max Saltonstall and Justin McCarthy are joined by Johnathan Hunt, VP of Information Security at InVision, to talk about pen testing, bug bounty programs, and secure code.

About The Hosts

Justin McCarthy

Justin McCarthy is the co-founder and CTO of StrongDM, the database authentication platform. He has spent his entire career building highly scalable software. As CTO of Rafter, he processed transactions worth over $1B collectively. He also led the engineering teams at Preact, the predictive churn analytics platform, and CafePress.

Max Saltonstall

Max Saltonstall loves to talk about security, collaboration and process improvement. He's on the Developer Advocacy team in Google Cloud, yelling at the internet full time. Since joining Google in 2011, Max has worked on video monetization products, internal change management, IT externalization and coding puzzles. He has a degree in Computer Science and Psychology from Yale.

About Token Security

At Token Security our goal is to teach the core curriculum for modern DevSecOps. Each week we will deep dive with an expert so you walk away with practical advice to apply to your team today. No fluff, no buzzwords.

Transcript:

Max Saltonstall 0:00

Hi, my name is Max Saltonstall from Google.

Justin McCarthy 0:00

This is Justin McCarthy from StrongDM. Welcome to the Token Security Podcast. Today we have a special guest.

Johnathan Hunt 0:21

Hi, everyone. This is Johnathan Hunt, VP of information security at InVision.

Justin McCarthy 0:33

Thanks for joining us. Today we’re going to be talking about secure code development and a whole bunch of other topics about staying secure as you ship your product quickly, which is a common theme for us here on the Token Security Podcast.

Johnathan Hunt 0:44

So I’ve got some interesting views, right. I’ve done this a long time, and I’ve done it for a lot of different companies. I feel like I’m the anti-typical security expert you’re going to find, and I think part of that is what has made me successful in the roles I’ve been in.

Johnathan Hunt 0:58

You know, I don’t necessarily do things the way the industry says they should be done, or the way it should work, or the way everyone else does it, right. I mean, I get on calls with the biggest companies in the United States, and actually across the world. At InVision, 95 of the Fortune 100 companies and 60% of the Global 2000 use our product. So I’m on phone calls with customers three times a week, and it’s the biggest companies in the world. And I will confidently and respectfully argue points with them: Why do you want this? Why are you asking? What ultimately are you trying to achieve? And they’re like, “Oh, you don’t have this one thing,” or something. And I’m like, “Okay, what are you trying to accomplish with that? Why do you see that as necessary for our service?” It’s about having that sort of discussion.

Johnathan Hunt 1:46

And I think pen testing is good for exactly five days a year, right? For five days a year, you could have a well-experienced consulting firm do a pen test on you with a couple of guys over 40 hours.

Johnathan Hunt 2:01

And they can say, “Hey, this is what we found, and this is what we were able to identify,” whether it’s the internal network, the external network, the application, or whatever they’re testing. “As of right now, this is where your risks and vulnerabilities lie, and where the threats to your organization lie.”

Johnathan Hunt 2:19

So that’s great and all until the next day, 24 hours later, when you push code out. Now what are you doing for the next 51 weeks of the year? People treat a pen test as meaning, “Oh, I had this pen test done, I fixed the four things they found, now I’m secure for 52 weeks.”

Johnathan Hunt 2:38

And I think that is a significant flaw in thinking. I don’t know where that theology ever gained momentum, or why people felt comfortable with it; I never have. And now, fortunately, that organization, that governing body, has determined that two pen tests a year will mean you’re secure for 52 weeks.

Max Saltonstall 3:00

So Johnathan, when you think about making sure the next code you release is secure, what are the key principles you apply for your teams?

Johnathan Hunt 3:10

So that’s an interesting question. We’ve got a couple of different approaches to ensuring that we properly secure the code prior to production deployment. We have a team that is specifically dedicated to application security. That team performs static analysis scans and dynamic analysis scans. We also have some tooling in house that runs scans locally on developer laptops, and it’s also implemented within the CI/CD pipeline, where it runs another check on that code.

Johnathan Hunt 3:48

What I’ve found is that the best approach to ensuring your code is secure is to take that responsibility onto your own group, rather than trusting that developers are doing what they need to do to push out secure code. This is something I’ve believed in for a really long time. It’s something I actually try to educate security professionals on, and teach the different companies I consult with: this is one of the most remarkable failures in understanding how code is secured, and in understanding how developers themselves approach secure coding.

Johnathan Hunt 4:26

If you think about their roles, and what their goals are, and what their performance reviews are based on, and what their quarterly or annual bonuses are based on, it’s never based on how secure their code was, right? It’s almost exclusively based on how quickly they deployed and how many projects they finished; sometimes it’s about closing tickets and closing bugs. But if you think about that, the reward almost works in the opposite direction: it encourages pushing out more buggy code, which creates more tickets and more remediation, so there are more tickets to close. So until our industry gravitates toward rewarding our developers and software engineers for secure code, it’s unlikely that secure coding is going to catch on.

Max Saltonstall 5:16

Can’t you just fire the ones that release insecure code? Doesn’t that fix the problem?

Justin McCarthy 5:21

Actually, you need to have a team at the end of the day.

Max Saltonstall 5:25

But then you have new developers, right? Isn’t that how it works? And then you’re just going to fire them again.

Johnathan Hunt 5:30

It’s true. It’s inevitable that everybody releases insecure code at some point, right? Everyone knows the OWASP Top 10. But what people don’t really know is that there are over 600 forms of coding flaws, vulnerabilities, and injection flaws. Everybody knows the Top 10 because they’re the most common, and there’s a little bit of overlap with the 600 I mentioned, with injection flaws and stuff like that. But chances are, even if a software engineer thinks they’re releasing secure code, what’s important to understand is that it might just be a snippet of code that they end up merging right into production, or merging into master, right?

Johnathan Hunt 6:12

That doesn’t mean that, once the application is compiled and running, it didn’t create a vulnerability somewhere further down. It could break authentication, it could create insecure session tokens. Any of these things can happen as a result of releasing and implementing new code, especially when you have teams of hundreds and hundreds of software engineers.
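Hunt mentions insecure session tokens as one side effect new code can introduce. As a minimal illustration (not InVision's code), the Python sketch below generates session tokens from the operating system's cryptographically secure random source using the standard `secrets` module, and compares presented tokens in constant time with `hmac.compare_digest`. The helper names `new_session_token` and `tokens_match` are hypothetical.

```python
import hmac
import secrets


def new_session_token(num_bytes: int = 32) -> str:
    """Return an unpredictable, URL-safe token drawn from the OS CSPRNG.

    Hypothetical helper for illustration; 32 random bytes encode to
    43 URL-safe base64 characters (padding is stripped).
    """
    return secrets.token_urlsafe(num_bytes)


def tokens_match(presented: str, stored: str) -> bool:
    """Compare tokens in constant time so timing does not leak how many
    leading characters were correct."""
    return hmac.compare_digest(presented.encode(), stored.encode())


if __name__ == "__main__":
    token = new_session_token()
    print(len(token), tokens_match(token, token))
```

The point of the sketch is the source of randomness: tokens built from timestamps, counters, or the general-purpose `random` module can be predicted, while `secrets` is intended for exactly this use.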

Justin McCarthy 6:33

So you mentioned taking responsibility. In this case, that’s the security team, or maybe a security-focused part of the engineering team, taking responsibility for the static analysis, the dynamic analysis, and application security in general. If you had to focus on one practice within that group, what’s your desert island practice? The first one you would set up and the last one you would let go of?

Johnathan Hunt 6:57

I think the most bang for your buck, the last thing I would let go of, is probably the vulnerability scanner itself. Now, my team, and I think most people’s teams, are multi-talented. We actually have software engineers on the team who were not AppSec people to begin with, because they can read and write code as well as anyone in the company.

Johnathan Hunt 7:31

So there’s a lot of manual discovery, and it helps to have someone who can speak that language, who can say, “This is exactly how you fix it, this is why it’s a bad thing, and this is what you’re doing,” and actually give examples and demonstrate that to the software engineers, because they are software engineers themselves, right? Very good software engineers, some of the best in the industry, that we pay really well to help secure our code. But that’s one person reading hundreds of thousands of lines of code, and that’s not scalable, right?

Johnathan Hunt 8:00

So the vulnerability scanner itself, whether it’s static or dynamic (I prefer to use both), is going to give you the most return, and it’s definitely going to identify the greatest number of vulnerabilities in quick fashion. Still, with those results, you’ve got to train someone. Someone has to understand how to use that tool and how to configure it: whether you’re running authenticated or unauthenticated scans, whether it’s able to move laterally across your application or services, whether it reads open source libraries, components, or dependencies. There’s a lot of tuning and a lot of configuration that goes into that tool. You can run it out of the box; again, you’re not going to get the best results, but you’re going to get something.

Johnathan Hunt 8:46

I mean, if you have no visibility into the security of your source code today, on day one you can spin up that tool, run it within five minutes, and immediately see the glaring problems. You’re going to find the most obvious things right away, and that gives you immediate insight: “Well, I’ve got these highs, like a SQL injection, a directory traversal, or cleartext authentication credentials being passed,” or whatever. There’s definite, immediate return there.
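SQL injection is the classic example of the glaring problems a first scan tends to surface. The sketch below uses Python's built-in `sqlite3` module and a hypothetical in-memory `users` table to contrast a query built by string concatenation with a parameterized query. It illustrates the vulnerability class only and is not drawn from InVision's codebase.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, username):
    # VULNERABLE: user input is concatenated straight into the SQL text.
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # SAFE: the "?" placeholder makes the driver bind the input as a value,
    # so it can never change the structure of the query.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row comes back
    print(find_user_safe(conn, payload))    # no rows match
```

With the payload `x' OR '1'='1`, the concatenated query becomes `WHERE name = 'x' OR '1'='1'` and matches every row, while the parameterized query treats the whole payload as a literal name and matches nothing.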

Justin McCarthy 9:09

You mentioned these groups are running... let’s say you’ve got the AppSec group, and you’ve got them running the scanning tool. Anytime you have another group running something that happens after a predecessor group has finished its work, you end up with workflow questions. I also heard you mention CI and CD, so you’ve obviously got a build pipeline going. Can you talk a little bit about whether this is a continuous process, whether it’s a gating process on each release, or whether it’s something that happens before or after release?

Johnathan Hunt 9:34

It’s a pretty complicated process at InVision, only because of the number of teams we have and how rapidly we deploy code. We’ve got around 24 or 25 teams at InVision, and the teams themselves deploy at least once a day, if not multiple times a day. So around 50 times a day, we are pushing new code into production.

Johnathan Hunt 9:58

The way the process works is that we have a static analysis scanner that sits locally on every software engineer’s laptop. Every line of code they write, they’re supposed to scan prior to committing it to the repos. Once it’s in the repos and we get ready to deploy and merge into master, the same tool runs again within the CI/CD pipeline, after QA testing and all the other automated checks. At that point, it notifies us, or notifies them, of any vulnerabilities present. Now, we can choose to block that. We can choose to say, “Hey, if it’s a critical or high severity vulnerability, we’re going to block the push to production.” That comes with some complications, right.

Johnathan Hunt 10:53

So right now we have an exception process built in, where you can override the block and push to production. Obviously, everyone’s notified of any exceptions granted, and then we immediately take measures to mitigate or remediate that vulnerability, depending on its severity.
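The blocking policy and exception override described above can be sketched as a small gate function that a CI job might call. This is a hypothetical Python illustration, not the vendor tool InVision uses: the `Finding` type, treating critical and high as the blocking severities, and the `notify` callback are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Assumption: severities that block a deploy (the episode names critical and high).
BLOCKING_SEVERITIES = {"critical", "high"}


@dataclass
class Finding:
    rule: str      # hypothetical rule id, e.g. "sql-injection"
    severity: str  # "critical", "high", "medium", or "low"


def gate(findings: Iterable[Finding],
         exception_granted: bool = False,
         notify: Callable[[str], None] = print) -> bool:
    """Return True if the deploy may proceed, False if it is blocked."""
    blockers = [f for f in findings if f.severity in BLOCKING_SEVERITIES]
    if not blockers:
        return True
    if exception_granted:
        # Override path: the deploy proceeds, but everyone is told about it.
        notify(f"Exception granted: deploying with {len(blockers)} blocking finding(s)")
        return True
    notify(f"Deploy blocked: {len(blockers)} critical/high finding(s)")
    return False


if __name__ == "__main__":
    scan = [Finding("sql-injection", "critical"), Finding("weak-cipher", "medium")]
    print(gate(scan))                          # blocked, prints False
    print(gate(scan, exception_granted=True))  # override, prints True
```

In a pipeline, a wrapper would typically exit with a nonzero status when `gate` returns `False` so the deploy step fails; the `notify` hook stands in for whatever channel announces granted exceptions.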

Justin McCarthy 11:11

So even though you potentially have an activity that, from a product manager’s perspective, could slow down the release pipeline, at 50 times a day it doesn’t sound like it’s slowing things down too much. It sounds like you actually have the tools necessary to balance speed and security. Week to week, you could even decide, “We need to elevate this particular type of finding,” and either focus on it more or block releases on it. It seems like you can actually hit the gas or the brake on either security or features.

Johnathan Hunt 11:37

I think that’s the great thing about the product we use, and, remaining vendor neutral, I think there are a couple of products that will do this in your deploy pipeline. And that’s accurate, Justin: you can turn blocking on and turn blocking off. The tooling itself is pretty phenomenal. There’s a great dashboard, and it alerts us on how many vulnerabilities it’s detecting a week or a day or an hour. It lets us know the category of each vulnerability and its severity, and it tells us which teams are deploying it. This is all part of a multifaceted approach to source code security at InVision; it’s not just running this one tool or running a dynamic analysis scanner.
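The dashboard Hunt describes rolls findings up by category, severity, and team. A minimal Python sketch of that kind of roll-up uses `collections.Counter`; the finding records, their field names, and the `summarize` helper are hypothetical and invented for illustration.

```python
from collections import Counter

# Hypothetical findings; field names and values are invented for illustration.
findings = [
    {"team": "payments", "category": "sql-injection", "severity": "critical"},
    {"team": "payments", "category": "xss", "severity": "medium"},
    {"team": "editor", "category": "xss", "severity": "medium"},
    {"team": "editor", "category": "path-traversal", "severity": "high"},
]


def summarize(records, *keys):
    """Count findings grouped by one field, or by a tuple of several fields."""
    if len(keys) == 1:
        return Counter(r[keys[0]] for r in records)
    return Counter(tuple(r[k] for k in keys) for r in records)


if __name__ == "__main__":
    print(summarize(findings, "severity"))
    print(summarize(findings, "team", "severity"))
```

Grouping by a tuple such as `("team", "severity")` gives the per-team, per-severity breakdown a dashboard would chart.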

Johnathan Hunt 12:21

These tools are primarily for identification and notification, right? We create JIRA tickets to prioritize the findings, assign them out, and measure them against our SLAs for how quickly we resolve criticals, highs, mediums, and lows. But that’s only one component of doing the best you can to secure the code within your environment. We also have the educational approach: on a monthly basis, we release a newsletter from the security team to the organization, and each month we highlight a vulnerability, usually the top vulnerability of the month. We go into great detail, and it’s really amazing. It’s about a page long. We lead with something like, “This is the vulnerability we found 740 times this month,” or whatever, right? I’m just kidding, InVision’s never had a vulnerability.
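Measuring tickets against per-severity SLAs comes down to simple date arithmetic. In the sketch below, the remediation windows are placeholders (the episode does not say what InVision's SLAs are), and `due_date` and `is_overdue` are hypothetical helpers.

```python
from datetime import date, timedelta

# Placeholder SLA windows in days; InVision's actual SLAs are not stated in the episode.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


def due_date(severity: str, opened: date) -> date:
    """Date by which a ticket of this severity should be resolved."""
    return opened + timedelta(days=SLA_DAYS[severity])


def is_overdue(severity: str, opened: date, today: date) -> bool:
    """True once `today` is past the SLA due date."""
    return today > due_date(severity, opened)


if __name__ == "__main__":
    opened = date(2024, 5, 1)
    for sev in SLA_DAYS:
        print(sev, due_date(sev, opened))
```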

Johnathan Hunt 13:11

By the way, everything is fine. So: this is the vulnerability we found X number of times this month. Then we actually put a screenshot of the code into the newsletter, talk about why it’s bad and what can happen if it’s exploited, and educate them: this is how you fix it, this is what you should do in the future, and it only takes a minute. Continuous education, I think, is really as important as the tooling itself. I mean, do you teach a man to fish, or do you give him a fish?
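In the same “here is the bad code, here is the fix” spirit as the newsletter, here is a sketch of a fix for directory traversal, one of the issues Hunt lists as typical first-scan findings. It assumes a hypothetical upload directory and Python 3.9 or later (for `Path.is_relative_to`). It resolves the user-supplied name and rejects anything that lands outside the base directory.

```python
from pathlib import Path

# Hypothetical upload directory, for illustration only.
UPLOAD_DIR = Path("/srv/app/uploads")


def safe_path(base: Path, user_supplied: str) -> Path:
    """Resolve a user-supplied file name, refusing anything outside `base`."""
    base = base.resolve()
    # resolve() collapses ".." segments and follows symlinks, so the check
    # below sees the real location the request would touch. An absolute
    # user path such as "/etc/passwd" replaces `base` entirely and is caught too.
    candidate = (base / user_supplied).resolve()
    if not candidate.is_relative_to(base):  # Path.is_relative_to: Python 3.9+
        raise ValueError(f"path escapes base directory: {user_supplied!r}")
    return candidate


if __name__ == "__main__":
    print(safe_path(UPLOAD_DIR, "report.pdf"))
    try:
        safe_path(UPLOAD_DIR, "../../etc/passwd")
    except ValueError as err:
        print(err)
```

Checking the resolved path, rather than searching the raw string for `..`, also catches encoded variants and symlinks that point outside the directory.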

Johnathan Hunt 13:45

If we just keep fixing their code and fixing their code and fixing their code, we’re not gaining any ground, we’re not getting any better. I mean, we’re staying secure, but that’s the problem: you’re filling holes that developers keep digging. What you want is for them to dig fewer holes. So that’s one approach.

Johnathan Hunt 14:03

We also have a pretty strong secure development training platform in house that has a lot of material around OWASP. This is another thing that this financial regulatory body and some others have mandated: security awareness training that you have to do once a year for 15 minutes, which makes you automatically secure. I feel really secure every time. I feel secure for about 45 seconds. I think after everyone takes their security awareness training, that elated feeling just melts away, and it’s like, we’re not secure anymore.

Johnathan Hunt 14:41

That’s amazing, I didn’t think about that. Starting after this podcast, it’s a daily requirement for everyone at my company. Great way to start off the day, with a 15-minute video.

Justin McCarthy 14:51

It’s just a morning meditation ritual.

Max Saltonstall 14:53

I usually do yoga and stretch while I’m listening to it.

Justin McCarthy 14:56

Fishing.

Johnathan Hunt 14:58

That’s amazing. Yeah, so in addition to that, right, they also require secure code development training for your developers, which people also typically do for 15 minutes once a year. Obviously, that’s not fixing anything. We’re still getting breached, we’re still losing 600 trillion records a year or whatever, right, people’s identities and credit card numbers.

Johnathan Hunt 15:19

So what we do is a rigorous training program that includes in-person presentations. We have quarterly offsites with each group within the company, and engineering itself does a quarterly offsite. Part of that offsite is some sort of training exercise around code development or security within the source code. We pick a topic, maybe proper authentication or tokenization or something along those lines, right, and continue to educate the team and the organization over time.

Max Saltonstall 15:55

It sounds like a pretty rigorous preventative curriculum you’ve got there. What about when you have to be responsive? Either because, you know, there was a breach or an attack or a compromise, or because someone has notified you, “Hey, I found this thing, and I don’t think it’s doing what it should be doing.” Then what do you do?

Johnathan Hunt 16:12

Well, that obviously adds a bit more stress. Like most companies, we have what you would call a tiger team, and we have an incident response process. What’s great about our incident response process, and I don’t know if a lot of companies do this, but what I’ve found to be really valuable at the companies I’ve been with, is that security is part of any sort of incident response, right? Incident response isn’t just about data breaches or security. It can be any sort of incident: say someone deployed an updated feature and it broke something within the product. We call an incident.

Johnathan Hunt 16:47

So we have these incident meetings where everyone jumps on a conference call and we get the right people in the room. The same thing happens for a security event or incident: we call an incident, the area managers in engineering and senior engineering management are notified, and we all jump in on the channel.

Johnathan Hunt 17:05

Also, you guys may not know this, and I should point it out because it might be important: InVision is a 100% remotely distributed company. We don’t have an office. We have almost 1,000 employees, and we’ve scaled to 1,000 people with everyone working out of their own home. That’s one thing that I think makes InVision quite unique. The reason it’s important in this context is that we can’t all just go jump into a conference room with our laptops. That’s how I’ve done it at previous companies: it’s an incident, you get this person and that person and this manager, you all go jump in the conference room, flip open your laptops, and hack it out until it’s fixed, right?

Johnathan Hunt 17:43

We’re all distributed, with people in different time zones. We’ve got people in 17 countries. I mean, it’s 3 a.m. for this engineer over here while it’s noon where I’m sitting. It’s daylight for me, he’s in the middle of the night, and, you know, there’s a baby crying in the background. But the point is, back to your question, with a security event or a security incident, you have to jump on it immediately.

Johnathan Hunt 18:05

You never know how significant an event is until you investigate. It could be something very trivial. It could be a false positive. I mean, we’ve had large-scale security incidents called where we had 25 people in the room, meaning the Slack channel, of course. And it turned out to be some sort of job that kicked off, one that someone forgot they had configured to run, and that’s what was causing this export of data out to a different server, which ended up being one of our backup servers. But we’re thinking our entire customer data set is being exfiltrated off to a server.

Johnathan Hunt 18:42

I mean, can you imagine what’s going through the minds of security when we don’t know there’s a backup going on? We don’t know there’s a backup server being configured in a different AWS instance.

Max Saltonstall 18:56

So we compromised ourselves. It’s all good.

Justin McCarthy 19:00

You can think of those events as organic drills. Rather than needing to instigate a drill, some normal aspect of your operations triggers something you can’t fully explain. So it’s like an unplanned drill: let’s do the investigation.

Johnathan Hunt 19:13

Yeah, the good thing that comes out of that, though, is that that’s the point when I’m like, “Oh yeah, we just proved out a DR test. That’s amazing. We’re done for the year, right? Check the box.” That was fantastic.

Max Saltonstall 19:27

So what about when it comes to you in a less urgent form? I mean, do you have people who say, “Hey, I found this thing, and it’s not quite right, and I want to let you know”?

Johnathan Hunt 19:36

Yeah, that’s great. And that’s something I think we as an industry, the security industry and the professionals in these roles, have to continue to reinforce with our teams, our organizations, and our software engineers. Whether it’s something in the code, or we’re expanding the scope to some stranger walking around the server room that we’ve never seen before, whether it’s physical security or logical security or source code or whatever, we have to ensure that people in the company feel comfortable and feel rewarded for notifying the security team, or their point of contact, of suspicious activity, something that doesn’t look right, or some weird executable they’ve never seen before. That’s something we completely endorse, and we want our staff to come to us anytime, day or night. I mean, all it takes is one time, one breach, and we could be in the news tomorrow, right? So we do keep a 24/7 security team. It’s hard being remotely distributed and globally dispersed, but we keep an on-call engineer at all times, ready and willing to accept that notification. It’s then their job to investigate and determine the severity of that event, or whether it was a false positive.

Max Saltonstall 20:55

Are there ways for people to bring security concerns to you and your team without it being an all-hands-on-deck incident?

Johnathan Hunt 21:03

So there are a lot of different ways that our teams, or anyone in our company, can notify the security team, right? If they feel it’s something urgent, they can page us, and that pages our on-call engineer. They can hit up the security team in our Slack channel, and we have a security email address. We also have a Confluence page that outlines how to report stuff to security, and they can do it that way. And we have a third-party component built into Gmail that lets them notify us of phishing attempts, malicious emails, suspicious executables, or something like that. The easier you make it on people, the more likely they are to do something, right. If you’re asking them to report something in a way that takes them 15 minutes, or means sitting on hold in a wait line or something, they’re just not going to do it. It’s just not worth it.

Max Saltonstall 21:57

Are internal employees eligible for a bug bounty?

Johnathan Hunt 21:59

That’s a great question. I get asked that all the time. No, they’re not, and that’s because of the incentive for fraud, right? They could create their own bugs and then report them, or their buddy could create the bug and they could report it. And our bug bounty pays out quite handsomely, right? We’ve got P1 severities that could pay out up to $2,500.

Justin McCarthy 22:19

That’s a little bit of a cool meta ethics detector, though.

Johnathan Hunt 22:22

It is, right? You could turn it on just for a short while and see who’s reporting. “This guy reported 13 vulnerabilities, and they all happen to be in his repo.” That’s amazing. So that’s a good question. We don’t at this time, though I know other companies do.

Max Saltonstall 22:37

I’ve seen some companies that dedicate time to a fixit, right? We’re going to kill a lot of bugs, usually bugs that are already known but haven’t been addressed for one reason or another, and then they’ll have some prizes or incentives for whoever makes the most progress in that fixit window. But I’m curious about your approach to getting external notification of security problems. How do you use that to make yourself more secure, rather than just inviting people to attack you?

Johnathan Hunt 23:01

So there are a couple of different things I think we do. I’m going to start answering this, Max, and if it’s not what you’re looking for, back me up and we can take another approach at it. In terms of external notifications, apart from a bug bounty program, our entire team obviously subscribes to a number of different feeds, and we also have some threat intelligence feeds built into Slack. One of them, which we pay for, is supposed to be cutting edge and give us the latest on vulnerabilities detected. They supposedly gather information from the mysterious dark net and are supposed to notify us of potential indicators of new malware or something that might affect our systems.

Johnathan Hunt 23:43

So we do rely heavily on some of that information, in addition to the bug bounty. I’m trying to think of what else we might do from an external perspective in terms of external notification. Another thing we do, again kind of tied to the bug bounty, is that we have a security page on InVision that outlines everything we do in terms of securing your product, securing your data, and securing your code. Obviously InVision is a design platform: we do design and prototyping, collaboration, 3D modeling; we do all this stuff in the design space, right.

Johnathan Hunt 24:18

So we’ve got a heavy presence on our marketing page and our support site outlining a bunch of stuff we do on the security front, which is obviously very valuable to our enterprise and private cloud customers, as well as our self-serve customers. There we also ask people to report anything within the product: data shows up that isn’t theirs or that they’re not familiar with, or something within the product is acting funny and they suspect whatever, right. We also invite researchers through our web page not only to report stuff to us through our ticketing system or support desk, but we also give them a link to the bug bounty program and the opportunity to report things through there.

Justin McCarthy 24:56

So we’ve spoken a bit about bug bounties and about some internal practices your team deploys to achieve secure code development. We also talked a bit about pen tests. I’m wondering whether you think of those as layers: the internal secure code development practices; a penetration test, or let’s say a naive penetration test, right, so not a persistent threat, but the kind where someone gets in for a couple of days; and then the bug bounty program. If you think of those three defensive layers, what kind of problem would you expect a pen test not to reveal that secure code practices would, or that secure code practices wouldn’t reveal but a bug bounty would find? Can you think of the kind of finding you might arrive at from each of those buckets?

Johnathan Hunt 25:39

So I think a pen test is going to give you an immediate return on initial discovery. It’s an advanced level of penetration testing, what would be like gray box testing or malicious hacking or whatever you want to call it, and it’s supposed to represent real-world scenarios: they start off with discovery and a scan, then they start digging in with some automated tools and do some manual testing on your sites, your services, your network, or whatever you’re having tested. It’s going to give you immediate results within a few days, from roughly 40 hours’ worth of work, of what they can discover. So they’re certainly going to catch the low-hanging fruit, right?

Johnathan Hunt 26:21

That’s the stuff that’s just going to stand out. It’s going to be super obvious to AppSec professionals, and it’s going to give you something to act on right away. Bug bounty, though, is a much more sophisticated approach, the one attackers will take, right. And this is why it’s so much more important and so much more valuable than a pen test. With a bug bounty program, you’re going to get sophisticated, well-experienced individuals that you’re inviting from across the world. You’re not depending on a consulting firm that has a couple of pen testers. Even if they have 100 pen testers, even if they have 500, you’re going to get assigned one, and that person is going to be good at some things and not good at others, right?

Johnathan Hunt 26:55

With a bug bounty program, you’re inviting the entire world to attack your site. And by the way, you’re obviously not using production, right? You’re spinning up a clone that has no customer data and isn’t even connected to your network. Do it the smart way, right? People don’t quite understand this sometimes, but do it the smart way, and let them have a go at it. You’re going to get someone over in Europe, you know, the Eastern Bloc, who’s going to be amazing at DOM-based XSS or something, right. They’re just going to crawl your app for 17 hours looking for that vulnerability to submit. You’re going to get these experts all across the world who are highly educated, highly intelligent, and highly experienced in certain areas of security, and you’re going to get the best of the best hitting your site in each of those areas, looking for those vulnerabilities.

Johnathan Hunt 27:39

Also, pen tests rarely perform lateral movement across your app, right? Most of the time they're using automated tools: they hit the network edge, they hit the application surface, they hit whatever IPs they're looking at, but they very rarely move laterally across your network. Even if they're able to compromise something at that point and it gives them, like, a root prompt or whatever, that's it, that's the end of the test. Whereas with a bug bounty, those people can continue to move and infiltrate your network and give you the chain of attacks they used to finally pull your data, right? Pwn your world, basically. Secure code development, in terms of that, is going to give you a lot more return than a pen test, by far, right? You're doing deep application scans, static and dynamic application scans. They run continuously, they run locally, you can run them in the pipeline, you can run them weekly. You get a pen test once a year; this is something that could run every day for the entire year and give you results right away.

Justin McCarthy 28:33

Send us off with some closing thoughts.

Max Saltonstall 28:35

If someone came to us saying, "I'm just starting my journey, I'm new to this security world. I've been making software, but I don't want to be a liability," what do they do?

Johnathan Hunt 28:46

So there are a couple of different approaches there. I want to leave you with two final thoughts that I think might be valuable for a newcomer, or even someone moderately experienced who's trying to dig deeper into this world of secure code development. For developers themselves, I would say you have to get educated on the way vulnerabilities are introduced and exploited, and the way they look.

Johnathan Hunt 29:06

And a great resource for that is obviously OWASP. You've heard of them a hundred times, but go to their website and check them out; there's a lot more on the website than you might expect, so go dig into it. Look through all the material they offer. They give full explanations of what things are and how to remediate them, and they give you the top vulnerabilities and everything like that. So I would definitely go and check out their website. If you're a security professional and you're looking for ways to identify vulnerabilities within your code right away, I would get a dynamic or static application scanner. You can spin it up within five minutes and immediately scan your code to highlight the immediate vulnerabilities, the low-hanging fruit that are going to be the most critical to your organization. That's something you can get exposure to right now. Within 10 minutes, you can have visibility into the things that can cause a breach of your network or your customer data today, right? So I would start with that, and then work on reinforcing education and good practices throughout your software development staff.

Justin McCarthy 30:02

All right, so thanks to Johnathan Hunt for joining us today. That was a great discussion. I feel educated.

Johnathan Hunt 30:06

I appreciate being here, everyone. Thanks for having me. It’s been a pleasure.

Max Saltonstall 30:10

Thanks very much. Thank you.

Next Steps

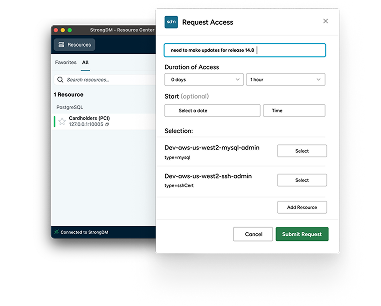

StrongDM unifies access management across databases, servers, clusters, and more—for IT, security, and DevOps teams.

- Learn how StrongDM works

- Book a personalized demo

- Start your free StrongDM trial

About the Author

Schuyler Brown, Chairman of the Board, began working with startups as one of the first employees at Cross Commerce Media. Since then, he has worked at the venture capital firms DFJ Gotham and High Peaks Venture Partners. He is also the host of Founders@Fail and author of Inc.com's "Failing Forward" column, where he interviews veteran entrepreneurs about the bumps, bruises, and reality of life in the startup trenches. His leadership philosophy: be humble enough to realize you don’t know everything and curious enough to want to learn more. He holds a B.A. and M.B.A. from Columbia University. To contact Schuyler, visit him on LinkedIn.
